In data analytics, ensuring data quality is crucial for accurate and reliable insights. Duplicates, whether from system errors, merging datasets, or manual entry, can severely compromise your analysis and lead to misleading conclusions. If left unchecked, these duplicates can distort key metrics, create inconsistencies in reports, and result in faulty decision-making.

For instance, duplicate customer records could inflate sales figures, or repeated entries in a dataset could skew averages and totals. This can lead decision-makers to make misguided choices, potentially costing the business time, money, and resources.

Properly handling duplicates is essential to maintaining the integrity of your data. Power BI offers several tools for this: the Power Query Editor for transforming data before it is loaded, DAX functions for building deduplicated calculated tables, and report filters for showing unique values without altering the underlying data.

By regularly cleaning your datasets and using best practices like unique identifiers, you ensure that your analytics are built on solid, trustworthy data.

In this guide, we will explore methods to identify, manage, and remove duplicates in Power BI, walking through each of these approaches step by step. We’ll also cover best practices, common challenges, advanced techniques, and practical examples to help you master data cleaning in Power BI.

Understanding Duplicates in Power BI

Before diving into the methods of removing duplicates, it’s essential to understand what duplicates are and why they can pose significant problems in data analysis.

What Are Duplicates?

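Duplicates are rows or records that repeat information already present in a dataset. Exact duplicates match in every column; key duplicates share the same value in the field(s) that should identify a record uniquely (such as a customer ID) but may differ in other columns. They can arise from various sources, including system issues during data import, errors during manual data entry, or merging datasets from different sources without proper cleansing.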

In business intelligence tools like Power BI, duplicates can significantly distort data visualizations and negatively impact the accuracy of key performance indicators (KPIs). For instance, consider a dataset containing customer information. If two entries have the same name, email, and address, they would be classified as duplicates. When these records are counted twice, it can inflate metrics such as total customer count, revenue figures, or engagement rates.

This inflation can lead decision-makers to draw incorrect conclusions, potentially affecting business strategies and operations. For example, if a company believes it has acquired a certain number of new customers based on inflated figures, it might overestimate its market growth, leading to misguided marketing strategies or resource allocation.

To mitigate the risks associated with duplicates, it’s essential to implement effective data management practices. Regularly auditing datasets, utilizing unique identifiers, and employing tools within Power BI—such as Power Query Editor to identify and remove duplicates—are vital steps in ensuring data integrity. By proactively managing duplicates, organizations can enhance the reliability of their data analytics, leading to more informed decision-making and better overall business outcomes.

Examples of Duplicates:

- Customer Data: Two or more entries with the same customer name and contact information could lead to inflated customer counts and misrepresent the actual number of customers.

- Sales Data: Duplicates in sales transactions could lead to inflated revenue numbers, potentially causing inaccurate financial reporting.

- Employee Records: Multiple records for the same employee with identical details can result in incorrect headcounts and skew payroll data.

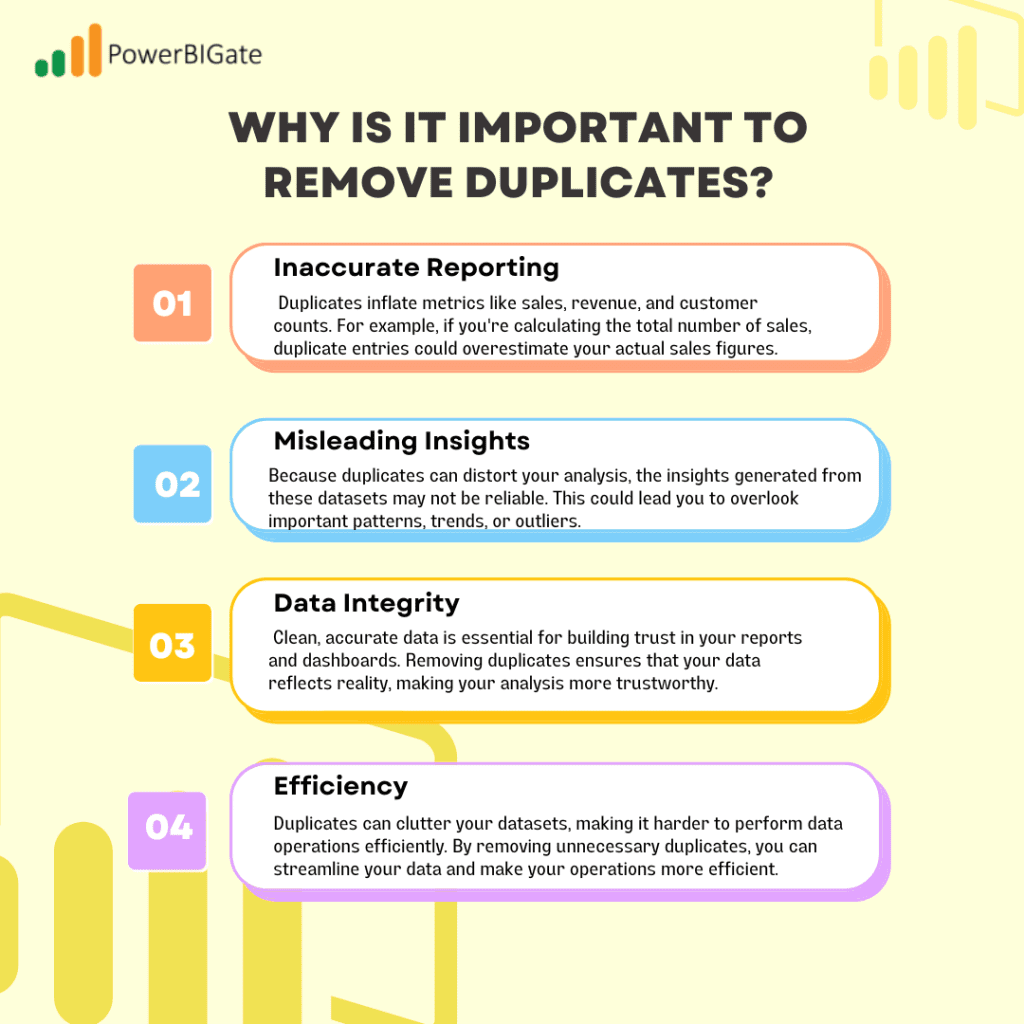
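To make the inflation concrete, here is a small plain-Python sketch (not Power BI code) over hypothetical sales rows in which one transaction was imported twice. It compares the naive totals with totals computed over one row per order ID.

```python
# Hypothetical sales rows; order 1002 was accidentally imported twice.
sales = [
    {"order_id": 1001, "customer": "Alice", "amount": 250.0},
    {"order_id": 1002, "customer": "Bob", "amount": 400.0},
    {"order_id": 1002, "customer": "Bob", "amount": 400.0},  # duplicate row
    {"order_id": 1003, "customer": "Alice", "amount": 150.0},
]

# Naive metrics count every row, including the duplicate.
naive_revenue = sum(row["amount"] for row in sales)
naive_order_count = len(sales)

# Deduplicated metrics keep one row per order_id.
unique_orders = {row["order_id"]: row for row in sales}
true_revenue = sum(row["amount"] for row in unique_orders.values())
true_order_count = len(unique_orders)

print(f"Revenue: {naive_revenue} reported vs {true_revenue} actual")  # 1200.0 vs 800.0
print(f"Orders:  {naive_order_count} reported vs {true_order_count} actual")  # 4 vs 3
```

A single repeated row overstates revenue by 50% here, which is exactly the kind of error that flows straight into a KPI card.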

Why Is It Important to Remove Duplicates?

The presence of duplicates can cause the following issues:

- Inaccurate Reporting: Duplicates inflate metrics like sales, revenue, and customer counts. For example, if you’re calculating the total number of sales, duplicate entries could overestimate your actual sales figures.

- Misleading Insights: Because duplicates can distort your analysis, the insights generated from these datasets may not be reliable. This could lead you to overlook important patterns, trends, or outliers.

- Data Integrity: Clean, accurate data is essential for building trust in your reports and dashboards. Removing duplicates ensures that your data reflects reality, making your analysis more trustworthy.

- Efficiency: Duplicates add rows that must be stored, refreshed, and scanned, which enlarges your data model and slows data operations. Removing them keeps your datasets lean and your reports responsive.

Identifying and Removing Duplicates in Power BI

Power BI offers a variety of methods to handle and remove duplicates, from using Power Query Editor to advanced DAX functions. Each method has its advantages and is suitable for different scenarios. Below, we’ll break down the most effective techniques.

Method 1: Using Power Query Editor

Power Query Editor is one of the most powerful tools available in Power BI for transforming and cleaning your data. With Power Query, you can easily remove duplicates from one or more columns with just a few clicks. This method is particularly useful when you want to perform data transformations before loading the data into your model.

Step-by-Step Process to Remove Duplicates in Power Query Editor:

- Open Power BI Desktop: First, launch Power BI Desktop and load your dataset by clicking on the Get Data button. Import your data from any source (Excel, SQL Server, etc.).

- Access Power Query Editor: Once your data is loaded, go to the Home tab and click on Transform Data. This will open Power Query Editor, where you can apply transformations to your dataset.

- Select Columns with Duplicates: In the Power Query Editor window, find the column(s) that contain duplicate values. For example, if you’re working with customer data, you may want to remove duplicates in the Customer ID or Email Address columns.

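- Remove Duplicates: Right-click the header of the selected column(s) (hold Ctrl to select several) and choose Remove Duplicates from the context menu; the same command is also available under Home > Remove Rows. Power Query removes entire rows whose values in the selected columns repeat an earlier row, keeping the first occurrence. Only the selected columns are compared, and text comparisons are case-sensitive.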

- Apply Changes: After cleaning the data, click Close & Apply to confirm the changes and load the transformed data into Power BI. You can now use the clean dataset in your reports and dashboards.
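The plain-Python sketch below models what a Remove Duplicates step on selected columns does: for each combination of values in those columns, the first row is kept and later rows are dropped, while all other columns are ignored. It is an illustration of the behavior, not Power Query code; the `customer_id`, `email`, and `city` fields are hypothetical.

```python
def remove_duplicates(rows, key_columns):
    """Keep the first row for each combination of values in key_columns.

    Roughly models Power Query's Remove Duplicates on selected columns:
    only the selected columns are compared, and string comparison is
    case-sensitive (Python's == on str is case-sensitive too).
    """
    seen = set()
    result = []
    for row in rows:
        key = tuple(row[col] for col in key_columns)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


customers = [
    {"customer_id": 1, "email": "ana@example.com", "city": "Lisbon"},
    {"customer_id": 2, "email": "ben@example.com", "city": "Oslo"},
    {"customer_id": 1, "email": "ana@example.com", "city": "Porto"},  # same ID, different city
    {"customer_id": 3, "email": "BEN@example.com", "city": "Oslo"},   # differs only by case
]

by_id = remove_duplicates(customers, ["customer_id"])
by_email = remove_duplicates(customers, ["email"])

print([r["city"] for r in by_id])  # ['Lisbon', 'Oslo', 'Oslo']
print(len(by_email))               # 3
```

Note that the Porto row for customer 1 is dropped without any warning, and that "BEN@example.com" is not treated as a duplicate of "ben@example.com".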

Visualizing Before and After Removing Duplicates:

Before removing duplicates, record how many rows the table contains, for example with a Card visual showing a measure such as COUNTROWS(YourTableName). After applying the transformation, check the same visual again to confirm how many records remain. Be careful about using a table visual for this check: by default it groups rows with identical values, so exact duplicates can appear as a single line.
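The same before-and-after check can be sketched in plain Python with `collections.Counter`, which also lists which keys are duplicated. The `order_id` field and the sample rows are hypothetical; in a Power BI model, the equivalent comparison is a measure using COUNTROWS on the table versus DISTINCTCOUNT on the key column.

```python
from collections import Counter

orders = [
    {"order_id": "A-1", "amount": 120},
    {"order_id": "A-2", "amount": 80},
    {"order_id": "A-2", "amount": 80},
    {"order_id": "A-3", "amount": 45},
    {"order_id": "A-3", "amount": 45},
    {"order_id": "A-3", "amount": 45},
]

# How often each key occurs; anything above 1 is duplicated.
key_counts = Counter(row["order_id"] for row in orders)
duplicated_keys = {key: n for key, n in key_counts.items() if n > 1}

rows_before = len(orders)
rows_after = len(key_counts)  # one row per distinct key after deduplication
rows_removed = rows_before - rows_after

print(duplicated_keys)                         # {'A-2': 2, 'A-3': 3}
print(rows_before, rows_after, rows_removed)   # 6 3 3
```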

Method 2: Using DAX Functions to Remove Duplicates

While Power Query Editor is the go-to tool for cleaning data before loading it into your model, DAX (Data Analysis Expressions) offers another way to manage duplicates. DAX functions can be used to create calculated tables that contain only unique records. Calculated tables are recomputed whenever the data model is refreshed (they do not respond to slicers or report filters), which makes them useful when you need to retain the original dataset but also want a separate table with only unique records.

Example DAX Formula for Removing Duplicates:

```DAX
UniqueTable = DISTINCT(YourTableName)
```

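This DAX formula creates a new table called UniqueTable while keeping the original table, YourTableName, intact. When DISTINCT receives a whole table, it compares every column, so it removes only rows that are identical in all columns. To list the unique values of a single key column instead, pass that column, as in DISTINCT(YourTableName[KeyColumn]), where KeyColumn stands for your own ID column.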
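The difference between whole-row uniqueness (what DISTINCT applied to a table gives you) and single-column uniqueness can be seen in a plain-Python analogy. This is not DAX; the customer rows and their column order (ID, name, email) are hypothetical.

```python
rows = [
    ("C1", "Ana", "ana@example.com"),
    ("C1", "Ana", "ana@example.com"),    # exact duplicate: removed by whole-row distinct
    ("C1", "Ana", "ana.s@example.com"),  # same ID, different email: kept
    ("C2", "Ben", "ben@example.com"),
]

# Whole-row distinct, analogous to DISTINCT(YourTableName): every column is compared.
# dict.fromkeys keeps the first occurrence of each row and preserves order.
distinct_rows = list(dict.fromkeys(rows))

# Single-column distinct, analogous to DISTINCT(YourTableName[KeyColumn]).
distinct_ids = list(dict.fromkeys(row[0] for row in rows))

print(len(distinct_rows))  # 3
print(distinct_ids)        # ['C1', 'C2']
```

Customer C1 still appears twice in the whole-row result, which is why a table-level DISTINCT alone does not guarantee one row per customer.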

Step-by-Step Process for Using DAX to Remove Duplicates:

- Create a New Table: In Power BI, click on the Modeling tab and select New Table. This will allow you to create a new table using a DAX formula.

- Enter DAX Formula: In the formula bar, type the DAX formula above to create a new table that only contains unique records.

- Use the New Table in Visuals: Once the new table is created, you can use it in your visuals and reports. This method ensures that duplicates are excluded from your analysis, while the original dataset remains available for reference.

Advanced DAX Techniques:

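You can also wrap DISTINCT in CALCULATETABLE to remove duplicates only among the rows that meet certain criteria. Here’s an example of a more advanced DAX formula:

```DAX
FilteredUniqueTable =
CALCULATETABLE(
    DISTINCT(YourTableName),
    YourTableName[ColumnToFilter] = "SomeValue" -- hypothetical filter value
)
```

This formula returns the unique rows of YourTableName where ColumnToFilter equals "SomeValue", a placeholder for your own condition. You may also see REMOVEFILTERS used as a CALCULATETABLE argument; it clears filters on a column, which matters in measures evaluated under report filters, but a calculated table is computed at refresh time without any report filter context, so REMOVEFILTERS has no effect there.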

Use Cases for DAX-Based Duplicate Removal:

- Creating Summary Tables: You can use DAX to create summary tables that exclude duplicates and display aggregated metrics, such as sales totals, customer counts, or revenue breakdowns.

- Frequently Refreshed Data Models: A calculated table is recomputed on every refresh, so the unique table stays current with the source data without altering the original table.

Method 3: Filtering Duplicates in Reports

Sometimes, you may not want to permanently remove duplicates from your dataset but still need to filter out duplicate values in your reports. Power BI allows you to apply filters directly in visuals, which gives you flexibility in how you handle duplicates without altering the underlying dataset.

Steps to Apply Filters in Reports:

- Create a Visual: First, create a visual by dragging and dropping fields into a chart, table, or matrix.

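- Apply Filters: Power BI visuals group rows by the fields they display, so a table visual containing only the Customer ID field shows each ID once. To count unique values rather than rows, set the field’s aggregation to Count (Distinct) in the visual’s field well. To surface the IDs that are duplicated, add a row-count measure to the visual and apply a visual-level filter in the Filters pane that shows only items where the count is greater than 1.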

- Maintain Original Data: This method lets you visualize unique records without permanently altering the underlying dataset, which can be useful in cases where you need to preserve the original data for other reports or analyses.

Use Cases for Report-Based Filtering:

- Dashboard Reporting: When creating dashboards, you might need to show only unique records for a specific metric while still keeping the original data intact.

- Ad-hoc Analysis: If you’re conducting a quick analysis and don’t want to remove duplicates permanently, applying filters in reports can be an efficient way to handle duplicates without data loss.

Best Practices for Managing Duplicates in Power BI

To manage duplicates effectively in Power BI, it’s important to follow best practices. These practices ensure that your data remains clean and that your analysis is both accurate and efficient.

1. Regular Data Cleaning

Frequent data imports from multiple sources increase the risk of duplicates, so clean your datasets on a regular schedule. Because Power Query re-applies every transformation step, including Remove Duplicates, each time the data refreshes, publishing the report to the Power BI service and configuring scheduled refresh keeps the data deduplicated automatically.

2. Use Unique Identifiers

Unique identifiers, such as Customer ID, Order ID, or Transaction ID, are key to managing duplicates. Whenever possible, ensure that each record has a unique identifier to make it easier to spot and remove duplicates.

3. Document Your Data Sources

Maintaining proper documentation of your data sources and the transformations applied to them can help you track where duplicates might arise. This is especially important when merging datasets from different sources, as duplication is a common issue during such processes.

Common Challenges When Removing Duplicates

Although removing duplicates is a straightforward process, it can present some challenges that you need to be aware of.

1. Loss of Important Data

One of the biggest risks when removing duplicates is accidentally deleting important data. For instance, if two records have the same Customer ID but different Phone Numbers, removing duplicates based solely on the Customer ID could result in losing one of the phone numbers.
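Before removing duplicates on a key, it can help to check which duplicated keys disagree in other columns, so nothing important is dropped silently. The plain-Python sketch below does that check; the `customer_id`, `name`, and `phone` fields are hypothetical, and in Power BI you would apply the same idea by grouping on the key and counting distinct values per column.

```python
from collections import defaultdict


def find_conflicts(rows, key, columns):
    """Return {key_value: {column: sorted_values}} for keys whose rows disagree."""
    values = defaultdict(lambda: defaultdict(set))
    for row in rows:
        for col in columns:
            values[row[key]][col].add(row[col])

    conflicts = {}
    for key_value, col_values in values.items():
        # Keep only the columns that hold more than one distinct value for this key.
        differing = {col: sorted(vals) for col, vals in col_values.items() if len(vals) > 1}
        if differing:
            conflicts[key_value] = differing
    return conflicts


customers = [
    {"customer_id": 7, "name": "Dana", "phone": "555-0100"},
    {"customer_id": 7, "name": "Dana", "phone": "555-0199"},  # conflicting phone
    {"customer_id": 8, "name": "Eli", "phone": "555-0123"},
    {"customer_id": 8, "name": "Eli", "phone": "555-0123"},   # harmless exact duplicate
]

print(find_conflicts(customers, "customer_id", ["name", "phone"]))
# {7: {'phone': ['555-0100', '555-0199']}}
```

Customer 8 is safe to deduplicate on its ID, while customer 7 needs a decision about which phone number to keep.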

2. Performance Issues

When dealing with large datasets, removing duplicates can sometimes cause performance issues, especially when using complex DAX formulas or transformations in Power Query. Power BI may take longer to process data when duplicate removal is applied to large tables.

3. Handling Partial Duplicates

Not all duplicates are exact matches. Sometimes, records may contain duplicate values in one field (e.g., customer name) but differ in others (e.g., email address or phone number). Deciding how to handle these partial duplicates can be challenging. In such cases, it’s essential to define clear rules for what constitutes a duplicate and how to resolve them.
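One common rule is to treat records as duplicates when their key fields match after normalization, for example ignoring letter case and extra spaces. Exact-match removal leaves such near-duplicates in place unless you normalize the columns first (for example with Power Query’s Trim and lowercase transforms). The sketch below shows that rule in plain Python; the choice of fields (`name`, `email`) and of normalization steps is an assumption to adapt to your own definition of a duplicate.

```python
def normalize(value):
    """Lower-case text and collapse runs of whitespace so near-identical text matches."""
    return " ".join(value.split()).casefold()


def dedupe_partial(rows, fields):
    """Keep the first row for each normalized combination of the given fields."""
    seen = set()
    kept = []
    for row in rows:
        key = tuple(normalize(row[f]) for f in fields)
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


contacts = [
    {"name": "Maria Lopez", "email": "maria@example.com", "phone": "555-0101"},
    {"name": "maria  lopez ", "email": "MARIA@example.com", "phone": "555-0101"},
    {"name": "Maria Lopez", "email": "m.lopez@example.com", "phone": "555-0101"},
]

unique_contacts = dedupe_partial(contacts, ["name", "email"])
print(len(unique_contacts))  # 2: the first two rows match after normalization
```

The third row shares the name but not the email, so under this rule it is kept; a looser rule (name only) would merge it as well, which is exactly the kind of decision worth writing down.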

Advanced Techniques for Removing Duplicates

In addition to the basic methods we’ve covered, there are more advanced techniques that you can use to handle duplicates in Power BI. These techniques are especially useful for large-scale data projects or complex data models.

1. Merging and Deduplicating Data from Multiple Sources

If you’re working with data from multiple sources (e.g., combining sales data from different regions), you may encounter duplicates when you combine them. In Power Query, Append Queries stacks tables that share the same columns, while Merge Queries joins two tables on matching key columns; in both cases, you then apply Remove Duplicates to the combined result.

Step-by-Step Process for Merging and Removing Duplicates:

- Open Power Query Editor: In Power BI, navigate to Transform Data to access Power Query Editor.

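- Merge or Append Queries: Use Merge Queries to join two tables on matching columns, selecting the appropriate join kind (e.g., Inner or Left Outer) so records match as intended. To stack rows from tables with the same structure, such as regional sales tables, use Append Queries instead.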

- Remove Duplicates: After merging the queries, use the Remove Duplicates function on the relevant columns to eliminate duplicate records.
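A frequent source of duplicates in this workflow is the join itself: if the table you merge in contains the same key more than once, each matching row is repeated once per match when the merged column is expanded. The plain-Python sketch below (hypothetical `orders` and `regions` tables) shows that row multiplication and the usual fix of deduplicating the lookup table before joining.

```python
def left_join(left, right, key):
    """Left outer join: each left row is repeated once per matching right row."""
    result = []
    for l_row in left:
        matches = [r_row for r_row in right if r_row[key] == l_row[key]]
        if not matches:
            result.append(dict(l_row))         # no match: keep the left row as-is
        for r_row in matches:
            result.append({**l_row, **r_row})  # one output row per match
    return result


def first_per_key(rows, key):
    """Keep the first row for each key value (Remove Duplicates on one column)."""
    seen, kept = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            kept.append(row)
    return kept


orders = [
    {"order_id": 1, "store": "S1", "amount": 100},
    {"order_id": 2, "store": "S2", "amount": 60},
]
regions = [
    {"store": "S1", "region": "North"},
    {"store": "S1", "region": "North"},  # duplicated lookup row
    {"store": "S2", "region": "South"},
]

joined = left_join(orders, regions, "store")
print(len(joined), sum(r["amount"] for r in joined))  # 3 260 (order 1 counted twice)

joined_clean = left_join(orders, first_per_key(regions, "store"), "store")
print(len(joined_clean), sum(r["amount"] for r in joined_clean))  # 2 160
```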

2. Using R or Python Scripts for Data Cleaning

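Power BI lets you run R or Python scripts as Power Query steps (Transform tab > Run R script or Run Python script); the current table is passed to the script as a data frame named dataset. If you need to perform more complex data cleaning tasks, such as removing duplicates based on advanced conditions, you can use these languages, provided R or Python is installed on your machine.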

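For example, an R script that keeps one row per CustomerID might look like this:

```R
# In a Power Query "Run R script" step, the current table is available as `dataset`
library(dplyr)

# Keep the first row for each CustomerID and retain all other columns
clean_data <- dataset %>% distinct(CustomerID, .keep_all = TRUE)
```

This script keeps the first row for each CustomerID, retaining all columns of that first row.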

3. Handling Duplicate Rows with Custom Logic

In some cases, you may want to apply custom logic to determine which duplicates to keep and which to remove. For example, if two sales records have the same Order ID, but one record has more recent information (e.g., an updated delivery address), you might want to keep the latest record.

You can use Power Query’s Conditional Columns or DAX’s IF functions to apply custom logic when removing duplicates. Here’s an example of how you could use DAX to keep the latest record based on a date field:

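```DAX
-- Keep each row whose OrderDate is the latest for its OrderID
LatestOrders =
FILTER(
    Orders,
    VAR CurrentOrderID = Orders[OrderID]
    VAR LatestDate =
        MAXX(FILTER(Orders, Orders[OrderID] = CurrentOrderID), Orders[OrderDate])
    RETURN Orders[OrderDate] = LatestDate
)
```

This DAX formula returns, for each Order ID, the row(s) with the latest OrderDate. If two rows share the same Order ID and the same latest date, both are kept, so add a tie-breaking column if you need exactly one row per order.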
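The keep-the-latest rule can also be expressed as a single pass over the data. The plain-Python sketch below keeps, for each hypothetical `order_id`, the row with the greatest `order_date`; on a tie it keeps the first row encountered. The sample orders and addresses are made up for illustration.

```python
from datetime import date

orders = [
    {"order_id": "O-1", "order_date": date(2024, 1, 5), "address": "12 Old Rd"},
    {"order_id": "O-1", "order_date": date(2024, 2, 1), "address": "9 New St"},
    {"order_id": "O-2", "order_date": date(2024, 1, 20), "address": "4 Elm Ave"},
]

# Track the most recent row seen so far for each order_id.
latest = {}
for row in orders:
    current = latest.get(row["order_id"])
    # Replace only when strictly newer, so on a tie the first row seen is kept.
    if current is None or row["order_date"] > current["order_date"]:
        latest[row["order_id"]] = row

latest_orders = list(latest.values())
print([(r["order_id"], r["address"]) for r in latest_orders])
# [('O-1', '9 New St'), ('O-2', '4 Elm Ave')]
```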

Conclusion: Managing Duplicates in Power BI for Reliable Data Analysis

Removing duplicates in Power BI is essential for maintaining data integrity and accuracy. Duplicates can skew reports, distort insights, and lead to incorrect conclusions. Fortunately, Power BI offers multiple methods for handling duplicates, each suited to different scenarios.

- Power Query Editor: This tool allows for easy data transformation before loading it into your model. You can quickly remove duplicates from one or multiple columns, ensuring a clean dataset.

- DAX Functions: Calculated tables built with functions like DISTINCT() hold unique records alongside the original data, without altering it.

- Report Filtering: If you need to maintain the original data but display only unique values, applying filters in reports is a flexible approach.

To master duplicate management in Power BI:

- Regularly clean your datasets to prevent duplicate buildup.

- Use unique identifiers like IDs for easier detection of duplicates.

- Employ advanced techniques like merging queries and applying custom logic when handling more complex cases.

By following these best practices, you can confidently remove duplicates, ensuring that your reports are accurate, reliable, and free from skewed data.

As we wrap up this comprehensive guide, we encourage you to reflect on your own experiences with managing duplicates. How do you handle duplicate data in your projects? Share your strategies and tips in the comments below!